How Did America Become a Christian Nation?

These days, there’s a lot of talk about how America isn’t really a Christian nation, or about how, as a nation of immigrants, it represents all faiths equally. But we know that this isn’t the case.

How exactly did America become a Christian nation? Let’s find out...

The Religion of the Founding Fathers

The immigrants who came to America from European countries were, as a rule, Christian. The major differences among them were between Christian denominations, that is, different churches, rather than differences in the underlying faith. For instance, practically all of the original immigrants believed in God, Jesus, and the Bible.

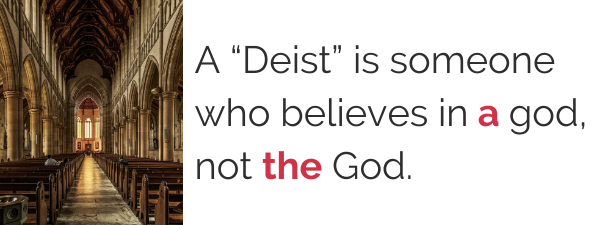

Naturally, the Founding Fathers themselves practiced various forms of Christianity. Although you might hear claims that Thomas Jefferson and many of the other Founding Fathers were “deists”, this is classic historical revisionism.

Rest assured that Thomas Jefferson and the other Founding Fathers were not deists in the practical sense of the term. Many of them did hold ideas about religion that were progressive or liberal for their time, which is part of why the Constitution protects religious freedom so broadly today.

Still, it’s inarguable that the Founding Fathers themselves were Christian. After all, both the Declaration of Independence and the Constitution contain religious language: the Declaration refers to a “Creator” and to “divine Providence”, and the Constitution is dated “in the Year of our Lord”.

The Constitution and its Religious Protections

Speaking of the Constitution, the First Amendment, part of the Bill of Rights, guarantees freedom of religion. As the courts have applied it (to the states through the Fourteenth Amendment), it prevents federal and state governments from discriminating against people because of their religion or lack of religion. This allows people to practice any faith they please, so long as that faith and its rituals don’t infringe on the rights of others.

For this reason, some people argue that America is not a Christian nation. If you can practice Islam or Buddhism, for instance, how can America be Christian?

What Does a "Christian Nation" Really Mean?

In our view, a “Christian Nation” is one in which a majority of citizens practice Christianity to one degree or another. Especially in a democracy like the United States, this inevitably affects how legislation is drafted, which bills are voted into law, and how the nation’s culture develops.

According to recent polls, approximately 65% of Americans identify as Christian. In other words, the majority of people living here profess belief in Jesus Christ, so it’s no real surprise that the dominant cultural views reflect this faith.

For instance, many people still believe in the value of the traditional family, oppose abortion, and so on. Of course, no federal document explicitly states that the US is a Christian nation, but none needs to.


So, in summary, America became a Christian nation because Christianity was the religion of the immigrants who came here centuries ago. Even today, most of us still carry on their faith.

Although the Constitution protects religious freedom for everyone, the fact remains that a majority of Americans are Christian.

Justin

Author